(Pre-requisite)

(AST405) Lifetime data analysis

0 Linear Regression Models

0.1 Multiple Linear Regression Models

Multiple Linear Regression Models

-

Let \(\{(y_i, x_{i1}, \ldots, x_{ip}), i=1, \ldots, n\}\) be the data obtained from the \(i^{th}\) subject

\(y_i\,\rightarrow\) response

\(x_{ij}\,\rightarrow\) \(jth\) independent variable

A multiple linear regression model \[ y_i = \beta_0 + \beta_1 x_{i1} + \cdots + \beta_p x_{ip} + \epsilon_{ij} \]

-

In matrix notation \[ \mathbf{y} = \mathbf{x}'\boldsymbol{\beta} + \boldsymbol{\epsilon} \]

\(\mathbf{y} = (y_1, \ldots, y_n)'\)

\(\mathbf{x}\,\rightarrow\) \(n\times (p+1)\) matrix with first column is a vector of one’s

\(\boldsymbol{\beta} = (\beta_0, \beta_1, \ldots, \beta_p)'\)

\(\epsilon_{ij}\rightarrow\) error term

-

General assumptions

\(\epsilon\)’s are independent

\(E(\epsilon_{ij}) = 0\)

\(V(\epsilon_{ij}) = \sigma^2\)

\(\epsilon_{ij}\sim N(0, \sigma^2)\)

-

The fitted model \[ \hat{y}_i = \hat{\beta}_0 + \hat{\beta}_1 x_{i1} + \cdots + \hat{\beta}_p x_{ip} \]

Ordinary least squares or maximum likelihood estimators \[ \hat{\boldsymbol{\beta}} = (\mathbf{x}'\mathbf{x})^{-1}\mathbf{x}'\mathbf{y} \]

Asymptotically \(\hat{\boldsymbol{\beta}}\) follows a normal distribution with mean vector \(\boldsymbol{\beta}\) and variance-covariance matrix \((\mathbf{x}'\mathbf{x})^{-1}\sigma^2\)

-

Residuals \[ \hat{\boldsymbol{\epsilon}} = \mathbf{y} - \hat{\mathbf{y}} \]

Estimate of error variance \[ \hat{\sigma}^2 = \frac{\boldsymbol{\epsilon}'\boldsymbol{\epsilon}}{n-p-1} \]

Residuals are used for model diagnostics

- Statistical inference regarding multiple linear regression models are based on t-test, F-test, and chi-square test

\[ \begin{aligned}H_{01}&: \beta_j=0 \; (j=1, \ldots, p)\\ H_{02}&: \beta_1 = \cdots = \beta_p = 0\\ H_{03}&: \beta_1 = \cdots = \beta_q =0 \; (q<p)\\ H_{04}&: \beta_1 = \cdots = \beta_q \; (q<p) \end{aligned} \]

0.2 An example with data on inheritance of height

Inheritance of height

-

During 1893–1898 in the UK, K. Pearson (a famous statistician) organized the collection of heights of 1375 mothers aged 65 or less and one of their adult daughters aged 18 or more (Pearson and Lee 1903)

Mother height \((x)\) \(\rightarrow\) predictor

Daughter height \((y)\) \(\rightarrow\) response

Does taller mother tend to have taller daughter?

- Assumed model “Daughter height on mother height” \[ y_i = \beta_0 + \beta_1 x_i + \epsilon_i \]

Inheritance of height

- The data

heights

- Transform

Heightsfromdata.frame()totibble()

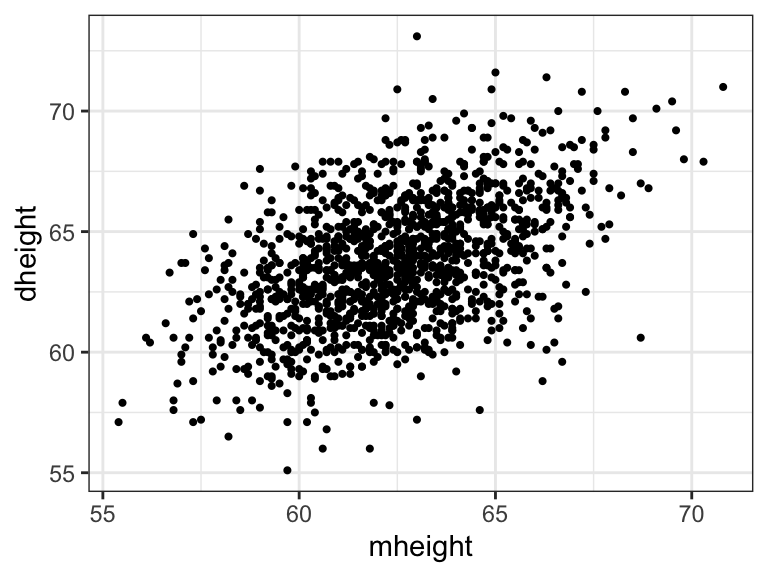

heights <- as_tibble(Heights)Scatterplot of mother height and daughter height

ggplot(heights) +

geom_point(aes(mheight, dheight), size = .75) +

theme_bw()

0.3 Model fit

lm()

-

lm()is the most popular R function to fit linear model (with continuous response)- A typical syntax of

lm()functionlm(formula, data), which returns alist

- A typical syntax of

For example, codes for fitting the model “Daughter height on mother height”

mod_h <- lm(formula = dheight ~ mheight,

data = heights)- Elements of

lm()output object contain useful objects related to the corresponding fit of linear model

names(mod_h) [1] "coefficients" "residuals" "effects" "rank"

[5] "fitted.values" "assign" "qr" "df.residual"

[9] "xlevels" "call" "terms" "model" mod_h$coefficients(Intercept) mheight

29.917437 0.541747 - Elements of

lm()output object contain useful objects related to the corresponding fit of linear model

[1] "call" "terms" "residuals" "coefficients"

[5] "aliased" "sigma" "df" "r.squared"

[9] "adj.r.squared" "fstatistic" "cov.unscaled" summary(mod_h)$coefficients Estimate Std. Error t value Pr(>|t|)

(Intercept) 29.917437 1.62246940 18.43945 5.211879e-68

mheight 0.541747 0.02596069 20.86797 3.216915e-84-

Output of

lm()object can also be used as an argument of some useful functions, such ascoefficients()returns the estimates of regression parametersresiduals()returns associated residuals (there are different types of residuals, usetypeargument to specify this)fitted()returns fitted values corresponding to the predictor values of the dataanova()returns ANOVA tablesummary()returns objects related to linear model fits, some of them are not included in thelm()objectconfint()returns confidence intervals of the regression parameters

0.4 broom package

broom

-

Most of the built-in R objects related to model fits (e.g.

lm(),t.test(), etc.) require tidy data as input, but its outputs are messy (not tidy), which cannot be used as input for the methods oftidyverse- e.g.

lm()returns a list, not a data frame

- e.g.

broompackage has functions that transform messy data into tidy data, which are used as inputs of differenttidyversefunctions, such asggplot(),kable(), etc.

-

broomhas three functions that takes model fit object as an argument and returns atibble(tidy data)glance()returns a signle row summary of the model fit, which contains estimates of coefficient of determination, error variance, etc.tidy()returns different values corresponding to each parameter, such as estimates, t-stat, p-value, etc.augment()returns fitted information corresponding to each observations, e.g. residuals, fitted values, SE of fitted values, etc.

glance()

Single row summary of the model fit

glance(mod_h)# A tibble: 1 × 12

r.squared adj.r.squared sigma statistic p.value df logLik AIC BIC

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 0.241 0.240 2.27 435. 3.22e-84 1 -3075. 6156. 6172.

# ℹ 3 more variables: deviance <dbl>, df.residual <int>, nobs <int>tidy()

Summary of the parameter estimates

tidy(mod_h)# A tibble: 2 × 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 (Intercept) 29.9 1.62 18.4 5.21e-68

2 mheight 0.542 0.0260 20.9 3.22e-84augment()

Observation-wise values of model fit

augment(mod_h) %>%

slice(1:6)# A tibble: 6 × 8

dheight mheight .fitted .resid .hat .sigma .cooksd .std.resid

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 55.1 59.7 62.3 -7.16 0.00172 2.26 0.00862 -3.16

2 56.5 58.2 61.4 -4.95 0.00310 2.26 0.00743 -2.19

3 56 60.6 62.7 -6.75 0.00118 2.26 0.00523 -2.98

4 56.8 60.7 62.8 -6.00 0.00113 2.26 0.00397 -2.65

5 56 61.8 63.4 -7.40 0.000783 2.26 0.00418 -3.27

6 57.9 55.5 60.0 -2.08 0.00707 2.27 0.00303 -0.9230.5 Model diagnostics

Model diagnostics

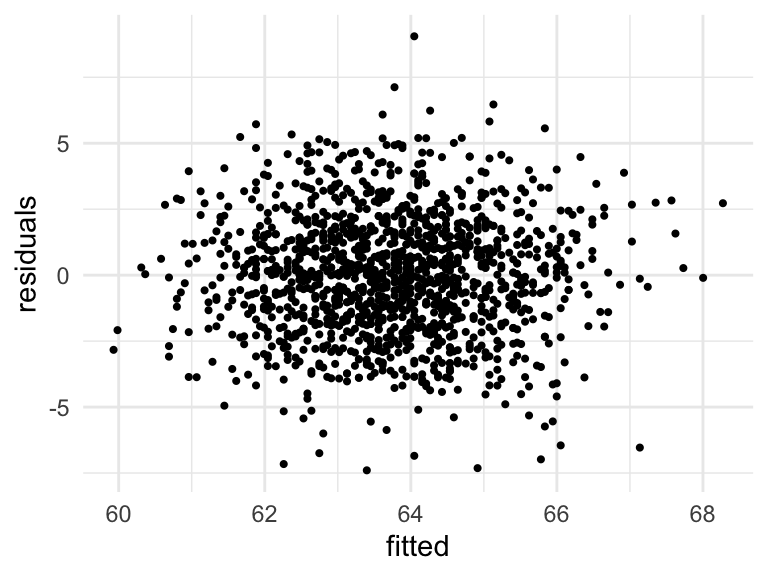

Residual vs fitted (Independence of errors, Constant variance)

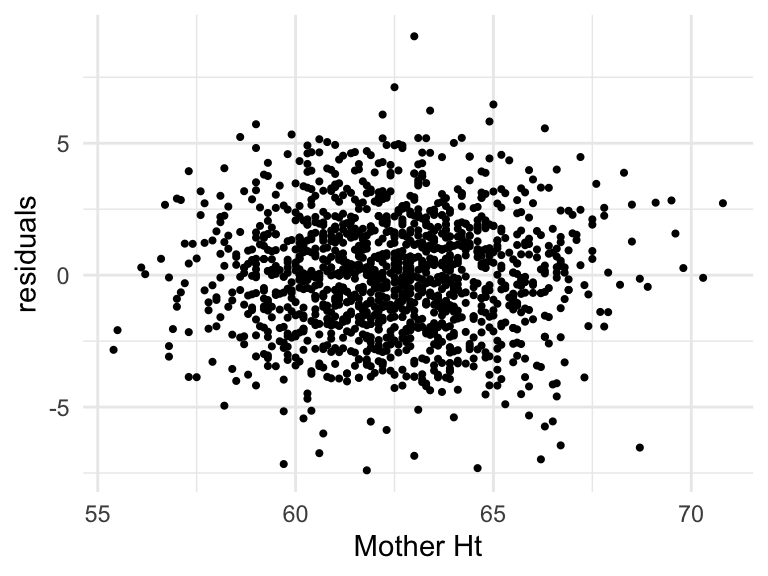

Residual vs predictor (Linearity, Zero mean)

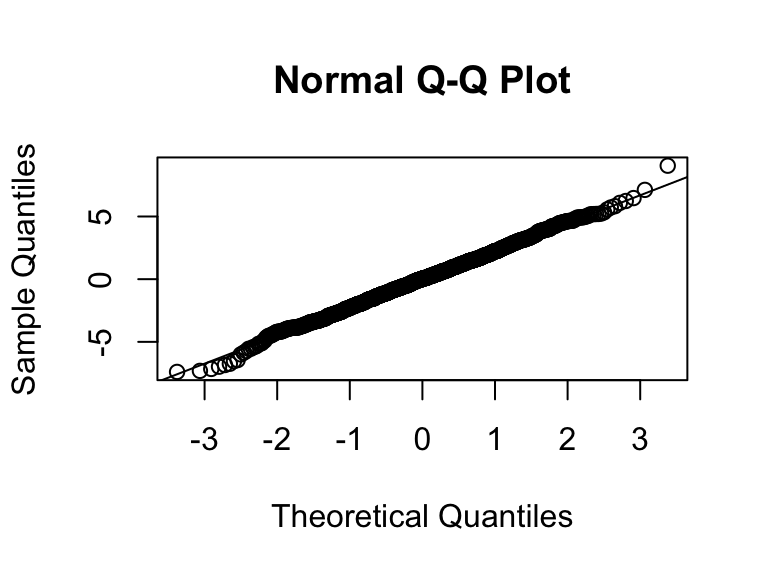

Q-Q normal plot of residuals (Normality of error)

Residual plots

Scatterplot of fitted values and residuals

ggplot(augment(mod_h)) +

geom_point(aes(.fitted, .resid), size = .75) +

labs(x = "fitted", y = "residuals") +

theme_minimal()

Scatterplot of predictor and residuals

ggplot(augment(mod_h)) +

geom_point(aes(mheight, .resid), size = .75) +

labs(x = "Mother Ht", y = "residuals") +

theme_minimal()

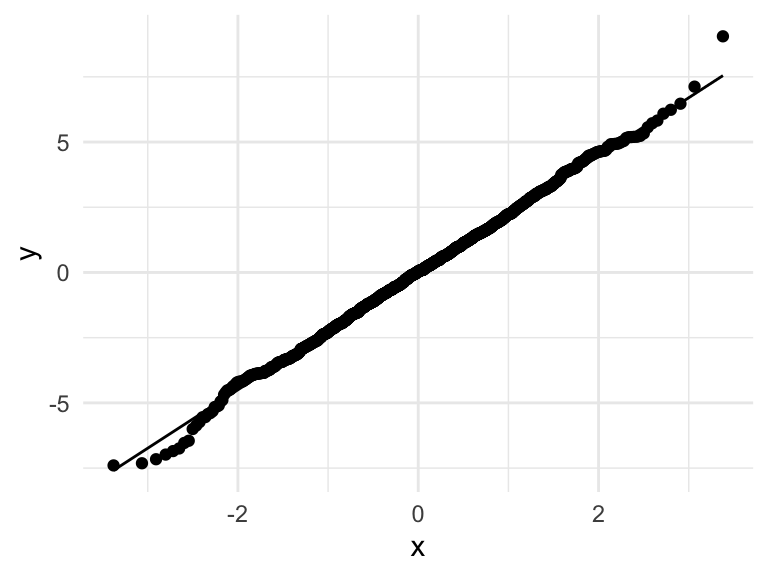

Q-Q normal plot

Using base R functions

- For Q-Q plot,

ggplot2functionsstat_qq()andstat_qq_line()can be used

ggplot(augment(mod_h), aes(sample = .resid)) +

stat_qq() +

stat_qq_line() +

theme_minimal()

Summary

Exercise

-

Using the FEV data (Download the FEV data), fit the following models and perform the model diagnostics

fevonAgefevonAgeandHgt

0.6 Regression models with categorical predictors

Regression models with categorical predictors

-

In general, for a predictor with two levels, define a dummy or binary variable that takes the values 1 and 0 corresponding to the two levels of the original variable

For example, if the variable \(X\) has the levels “male” and “female”, we can define a dummy variable \(D\) such that \[ D= \begin{cases} 1 & \text{if $X = \text{male}$} \\ 0 & \text{if $X = \text{female}$} \end{cases} \]

The level “female” is considered as the “reference” category in this case

Dummy variable can also be defined with “male” as the reference category

-

The model “\(Y\) on \(X\)” can be expressed in terms of “\(D\)” as \[ E(Y\,\vert\, D) = \beta_0 + \beta_1 D \]

\(\beta_0 = E(Y\,\vert\,D=0)\)

\(\beta_1 = E(Y\,\vert\,D=1) - E(Y\,\vert\,D=0)\)

Interpretations of regression parameters depend on the reference category considered

For a categorical variable with more that two categories, more than one dummy variables needed to be defined

-

Let \(X\) be a categorical variable with three categories, say “poor”, “middle”, and “rich”

To consider \(X\) as a predictor, two dummy variables need to be defined \[ \begin{aligned} D_1 = \begin{cases} 1 & \text{if $X=\text{poor}$} \\ 0 & \text{otherwise} \end{cases} &\;\;\; D_2 = \begin{cases} 1 & \text{if $X=\text{middle}$} \\ 0 & \text{otherwise} \end{cases} \end{aligned} \]

In this case, the category “rich” is considered as the reference category and dummy variables can be defined with other category as the reference

-

The regression model “\(Y\) on \(X\)”, where \(X\) has three categories can be defined as \[ E(Y\,\vert\,D_1, D_2) = \beta_0 + \beta_1 D_1 + \beta_2 D_2 \]

\(\beta_0= E(Y\,\vert\,D_1=0, D_2=0)\)

\(\beta_1 = E(Y\,\vert\,D_1=1, D_2=0) - E(Y\,\vert\,D_1=0, D_2=0)\)

\(\beta_2 = E(Y\,\vert\,D_1=0, D_2=1) - E(Y\,\vert\,D_1=0, D_2=0)\)

0.7 Interaction

Regression models with one categorical and one continuous predictors

Consider a regression model “\(Y\) on \(X_1\) and \(X_2\)”, where \(X_1\) has two levels (“male” and “female”) and \(X_2\) is continuous (say age in years)

-

Define a dummay variable for \(X_1\)

- \(D_{1M} = I(X_1 = \text{Male})\)

Consider the models \[ \begin{aligned} (1) \;\; E(Y\,\vert\, D_{1M}, X_2) & = \beta_0 + \beta_1 D_{1M} + \beta_2 X_2 \\ (2) \;\; E(Y\,\vert\, D_{1M}, X_2) & = \beta_0 + \beta_1 D_{1M} + \beta_2 X_2 + \theta D_{1M} X_2 \end{aligned} \]

- How would you interpret the parameters in model (1)?

- How would you interpret \(\theta\) in model (2)?

\[ \begin{aligned} (2) \;\; E(Y\,\vert\, D_{1M}, X_2) &= \beta_0 + \beta_1 D_{1M} + \beta_2 X_2 + \theta D_{1M} X_2 \\ & = \begin{cases} \beta_0 + \beta_2 X_2 & \text{for female} \\ (\beta_0 + \beta_1 + (\beta_2 + \theta) X_2 &\text{for male} \end{cases} \end{aligned} \]

\(\beta_0\,\rightarrow\) mean response of female of age 0

\(\beta_1\,\rightarrow\) difference of mean response between male and female when both of them at age of 0

\(\beta_2\,\rightarrow\) change of mean response for 1 year change in age for female

-

\(\theta\,\rightarrow\) difference in the change of the mean response between males and females when their age changes by 1 year

- it represents how the effect of age differs between males and females

Regression model with two categorical variables

Consider a regression model “\(Y\) on \(X_1\) and \(X_2\)”, where \(X_1\) has two levels (“male” and “female”) and \(X_2\) has three levels (“poor”, “middle”, and “rich”)

-

Define the dummy variables for the categorical variables \(X_1\) and \(X_2\)

For \(X_1\), \(D_{1M} = I(X_1=\text{male})\)

For \(X_2\), \(D_{21} = I(X_2 = \text{poor})\) and \(D_{22} = I(X_2 = \text{middle})\)

Model 1

\[ E(Y\,\vert\, D_{1M}, D_{21}, D_{22}) = \beta_0 + \beta_1D_{1M} + \beta_{21}D_{21} + \beta_{22} D_{22} \]

\(\beta_0\,\rightarrow\) mean response of rich female individuals

\(\beta_1\,\rightarrow\) mean difference between male and female when \(X_2\) is fixed

\(\beta_{21}\,\rightarrow\) mean difference between poor and rich when \(X_1\) is fixed

\(\beta_{22}\,\rightarrow\) mean difference between middle and rich when \(X_1\) is fixed

Model 2

-

The “Model 2” contains both main effects and interactions \[ \begin{aligned} E(Y\,\vert\, D_{1M}, D_{21}, D_{22}) &= \beta_0 + \beta_1D_{1M} + \beta_{21}D_{21} + \beta_{22} D_{22} \\[.25em] & \;\;\;\;\; + \theta_1 D_{1M} D_{21} + \theta_2 D_{1M} D_{22} \end{aligned} \]

\(\beta_0\,\rightarrow\) mean response of rich female individuals

Interpretations of other parameters are complicated!!

- The following table of expected response would help us to define parameters of “Model 2”

\[ \begin{array}{llll} \hline \text { gender } & \text { Poor } & \text { Middle } & \text { Rich } \\ \hline \text { Male } & \beta_0+\beta_1+\beta_{21}+\theta_1 & \beta_0+\beta_1+\beta_{22}+\theta_2 & \beta_0+\beta_1 \\ \text { Female } & \beta_0+\beta_{21} & \beta_0+\beta_{22} & \beta_0 \\ \hline \end{array} \]

- Interpret \(\theta\)’s

Difference of mean response between male and female among the “rich” \[ E(Y\,\vert\, \text{Male}, \text{Rich}) - E(Y\,\vert\, \text{Female}, \text{Rich}) = \beta_1 \]

Difference of mean response between male and female among the “middle” \[ E(Y\,\vert\, \text{Male}, \text{Middle}) - E(Y\,\vert\, \text{Female}, \text{Middle}) = \beta_1 + \theta_1 \]

Difference in differences (DID) \[ \begin{aligned} \Big\{E(Y\,\vert\, \text{Male}, \text{Middlw}) - E(Y\,\vert\, \text{Female}, \text{Middle})\Big\} &\;\;\;\;\\ \;\;\;-\Big\{E(Y\,\vert\, \text{Male}, \text{Rich}) - E(Y\,\vert\, \text{Female}, \text{Rich})\Big\} &= \theta_1 \end{aligned} \]

Interaction term \(\theta_1\) measures whether the effect of “gender” is the same at the levels “Rich” and “Middle”

Similarly, the interaction term \(\theta_2\) measures whether the effect of “gender” is the same at the levels “Rich” and “Poor”

In the presence of significant interactions, the main effects have no interesting interpretations

Interaction terms should not be in the model if both the corresponding main effects are not significant

Insignificant interaction terms should not be in the model

Acknowledgements

This lecture is adapted from materials created by Mahbub Latif